This document describes how to deploy a Kubernetes cluster on CentOS 7, Debian 12, or Ubuntu 22.04.

| Server IP | Host role |
|---|---|
| 192.168.1.183 | Kubernetes 1 (Master, Node) |
| 192.168.1.184 | Kubernetes 2 (Master, Node) |
| 192.168.1.185 | Kubernetes 3 (Master, Node) |

- Minimum requirement: 3 nodes.

- The Master nodes are redundant: if one Master goes down, the cluster can still be operated and keeps running workloads.

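Requirements

- No network policy restrictions between the cluster servers

- Hostnames must be unique across the cluster servers

- The MAC address of the primary network interface must be unique (check with `ip link`)

- product_uuid must be unique (check with `cat /sys/class/dmi/id/product_uuid`)

- Port 6443, which kube-apiserver listens on, must not be in use (check with `nc -vz 127.0.0.1 6443`; a refused connection means the port is free)

- Swap must be disabled (run `swapoff -a`, and also disable the swap mount in /etc/fstab)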
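The swap requirement has two parts: turning swap off now, and keeping it off after a reboot. The second part can be sketched as below, assuming a conventional fstab layout in which the filesystem type appears as the whitespace-delimited word `swap`. The helper name `disable_swap_in_fstab` is hypothetical and not part of the original procedure.

```shell
# Comment out every active swap entry in an fstab-style file.
# Hypothetical helper; the path is a parameter so it can be tried on a copy first.
disable_swap_in_fstab() {
  fstab="${1:-/etc/fstab}"
  # Lines that are already comments are skipped; any other line with a
  # 'swap' field gets a leading '#'. The original is kept as <file>.bak.
  sed -E -i.bak '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage on a real node (as root):
#   swapoff -a
#   disable_swap_in_fstab /etc/fstab
```

Keeping a `.bak` copy makes it easy to restore the file if an entry is commented out by mistake.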

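Configure hosts

Add the following hosts entries on every node of the Kubernetes cluster so that k8s-master points to the three master nodes

`cat >> /etc/hosts << EOF`

Note: every node in the Kubernetes cluster needs these hosts entries, including nodes added to the cluster later.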
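The body of the heredoc is not shown above. A minimal sketch of the same step follows, assuming one line per master IP from the server table, each mapped to `k8s-master`. The helper name `add_k8s_master_hosts` is hypothetical. Unlike a plain append, it skips entries that already exist, so it is safe to re-run.

```shell
# Append "<master IP> k8s-master" entries to a hosts file, once each.
# The IPs are the three masters from the server table; the exact lines
# used by the original procedure are an assumption.
add_k8s_master_hosts() {
  hosts_file="${1:-/etc/hosts}"
  for ip in 192.168.1.183 192.168.1.184 192.168.1.185; do
    entry="$ip k8s-master"
    # -x matches the whole line, -F treats the entry as a fixed string.
    grep -qxF "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done
}

# Usage (as root, on every node):
#   add_k8s_master_hosts /etc/hosts
```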

Install the Docker container runtime

Perform these steps on every node of the Kubernetes cluster

1. Download the Docker package

File shared via network drive: docker-27.3.1.tgz

2. Install Docker

`tar -zxvf docker-27.3.1.tgz`

3. Create the Docker and containerd directories

`mkdir /etc/docker`

4. Create Docker's daemon.json file

`cat > /etc/docker/daemon.json <<\EOF`
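The contents of daemon.json are not shown above. The sketch below is therefore an assumption, not the author's file: it sets the systemd cgroup driver and caps the size of container logs, both of which are standard Docker daemon options. The helper name `write_docker_daemon_json` is hypothetical.

```shell
# Write a minimal Docker daemon configuration (assumed content).
write_docker_daemon_json() {
  target="${1:-/etc/docker/daemon.json}"
  # Quoting the delimiter ('EOF', or \EOF as in the step above) stops the
  # shell from expanding anything inside the heredoc body.
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": { "max-size": "100m", "max-file": "3" }
}
EOF
}

# Usage (as root):
#   mkdir -p /etc/docker
#   write_docker_daemon_json /etc/docker/daemon.json
```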

5. Create containerd's config.toml file and modify the configuration

`containerd config default > /etc/containerd/config.toml`

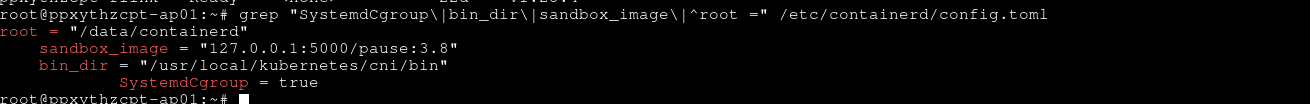
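The keys that the check command inspects (`SystemdCgroup`, `bin_dir`, `sandbox_image`, `root`) suggest which settings step 5 changes. The sketch below scripts two of them. It assumes the generated defaults contain `SystemdCgroup = false` and `bin_dir = "/opt/cni/bin"`, and that the CNI plugins live in /usr/local/kubernetes/cni/bin. The values for `sandbox_image` and `root` are not given in the text, so they are left untouched. The helper name `patch_containerd_config` is hypothetical.

```shell
# Switch containerd to the systemd cgroup driver and point it at the
# CNI plugin directory used in this guide (assumed target values).
patch_containerd_config() {
  cfg="${1:-/etc/containerd/config.toml}"
  # A copy of the original file is kept as <file>.bak.
  sed -i.bak \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e 's#bin_dir = "/opt/cni/bin"#bin_dir = "/usr/local/kubernetes/cni/bin"#' \
    "$cfg"
}

# Usage (as root):
#   patch_containerd_config /etc/containerd/config.toml
```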

Check the containerd configuration file

`grep "SystemdCgroup\|bin_dir\|sandbox_image\|^root =" /etc/containerd/config.toml`

The output is as follows:

6. Configure the systemd unit file for Docker

`cat > /etc/systemd/system/docker.service <<EOF`

7. Configure the systemd unit file for containerd

`cat > /etc/systemd/system/containerd.service <<EOF`

8. Start containerd and Docker and enable them at boot

`systemctl daemon-reload && systemctl restart containerd && systemctl enable containerd`

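Install the CNI plugins

Perform these steps on every node of the Kubernetes cluster

1. Download the CNI plugin archive

File shared via network drive: cni-plugins-linux-amd64-v1.1.1.tgz

2. Create the CNI installation directory

`mkdir -p /usr/local/kubernetes/cni/bin`

3. Extract the CNI plugins into the installation directory

`tar -zxvf cni-plugins-linux-amd64-v1.1.1.tgz -C /usr/local/kubernetes/cni/bin`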

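Install the commands required by the K8S cluster

Install the crictl, kubeadm, kubelet, and kubectl commands on every node of the Kubernetes cluster

1. Create the command installation directory

`mkdir -p /usr/local/kubernetes/bin`

2. Download the command files into the installation directory

`# crictl download link; after downloading, upload the file to the target server and extract it into /usr/local/kubernetes/bin`

3. Make the command files executable

`chmod +x /usr/local/kubernetes/bin/*`

4. Configure systemd to manage kubelet

`cat > /etc/systemd/system/kubelet.service <<\EOF`

5. Configure the kubeadm drop-in for the kubelet service

`mkdir -p /etc/systemd/system/kubelet.service.d`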
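The unit file and drop-in bodies for steps 4 and 5 are not shown above. The sketch below is adapted from the upstream kubelet packaging templates, with the binary path changed to /usr/local/kubernetes/bin/kubelet to match this guide. Treat the contents as an assumption rather than the author's exact files. The helper name `write_kubelet_units` is hypothetical.

```shell
# Write the kubelet unit and its kubeadm drop-in under a systemd directory.
write_kubelet_units() {
  unit_dir="${1:-/etc/systemd/system}"
  mkdir -p "$unit_dir/kubelet.service.d"

  # \EOF quotes the delimiter, so nothing inside the body is expanded.
  cat > "$unit_dir/kubelet.service" <<\EOF
[Unit]
Description=kubelet: The Kubernetes Node Agent
Documentation=https://kubernetes.io/docs/
Wants=network-online.target
After=network-online.target

[Service]
ExecStart=/usr/local/kubernetes/bin/kubelet
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
EOF

  # The empty ExecStart= clears the command from kubelet.service before
  # redefining it with the kubeadm-managed arguments.
  cat > "$unit_dir/kubelet.service.d/10-kubeadm.conf" <<\EOF
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
EnvironmentFile=-/etc/sysconfig/kubelet
EnvironmentFile=-/etc/default/kubelet
ExecStart=
ExecStart=/usr/local/kubernetes/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS
EOF
}

# Usage (as root):
#   write_kubelet_units /etc/systemd/system
```

The quoted heredoc delimiter matters here: with a plain `<<EOF`, the shell would expand `$KUBELET_KUBECONFIG_ARGS` and the other variables to empty strings while writing the file.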

6. Start kubelet and enable it at boot

`systemctl daemon-reload && systemctl restart kubelet && systemctl enable kubelet`

There is no need to check the service status after this restart; the service comes up on its own once kubeadm init or kubeadm join has run in the later steps.

7. Add the directory containing the K8S commands to the PATH environment variable

CentOS

`export PATH=/usr/local/kubernetes/bin/:$PATH`

Ubuntu / Debian

`export PATH=/usr/local/kubernetes/bin/:$PATH`
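An `export` on its own only affects the current shell session. One way to make the setting persistent is sketched below, assuming a profile script under /etc/profile.d is acceptable on all three distributions. The file name `kubernetes.sh` and the helper name `persist_k8s_path` are hypothetical, not taken from the original.

```shell
# Add the K8S command directory to PATH for future login shells.
persist_k8s_path() {
  profile="${1:-/etc/profile.d/kubernetes.sh}"
  # Single quotes keep $PATH literal so it is expanded at login time.
  line='export PATH=/usr/local/kubernetes/bin/:$PATH'
  touch "$profile"
  # Append only if the exact line is not present yet.
  grep -qxF "$line" "$profile" || printf '%s\n' "$line" >> "$profile"
}

# Usage (as root):
#   persist_k8s_path
#   . /etc/profile.d/kubernetes.sh   # apply to the current shell as well
```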

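8. Set the crictl runtime endpoint so that later image pulls with crictl do not fail

`crictl config runtime-endpoint unix:///run/containerd/containerd.sock`

Install dependencies

Perform these steps on every node of the Kubernetes cluster

1. Install the socat and conntrack dependencies

Servers with internet access

`# CentOS / Red Hat: install with yum`

Servers without internet access

`# socat package download link; after downloading, upload it to the target server (CentOS 7.9 is used here; download a matching package if the dependencies do not fit)`

2. Check for missing commands

`docker --version && dockerd --version && pgrep -f 'dockerd' && crictl --version && kubeadm version && kubelet --version && kubectl version --client=true && socat -V | grep 'socat version' && conntrack --version && echo ok || echo error`

If the output ends with ok, everything is in place; if it ends with error, install whichever command the error message reports as missing.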
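The one-line check stops at the first failure, so it reports only one missing command per run. A small sketch that lists every missing command in one pass is shown below. The helper name `missing_commands` is hypothetical. It only tests whether each command is on PATH, not whether dockerd is running.

```shell
# Print the name of every given command that cannot be found on PATH.
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Usage:
#   missing_commands docker dockerd crictl kubeadm kubelet kubectl socat conntrack
# No output means all commands were found.
```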

Modify kernel settings

Perform these steps on every node of the Kubernetes cluster

1. Add kernel modules

`cat > /etc/modules-load.d/kubernetes.conf <<EOF`

2. Load the modules

`modprobe overlay`

3. Add kernel parameters

`cat >> /etc/sysctl.conf <<EOF`
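The heredoc bodies for the module list and the kernel parameters are not shown above. The sketch below assumes they follow the usual Kubernetes container runtime prerequisites: the `overlay` and `br_netfilter` modules, bridged traffic visible to iptables, and IPv4 forwarding. It also writes the parameters to a separate file under /etc/sysctl.d instead of appending to /etc/sysctl.conf, so that re-running it does not duplicate lines. The helper name `write_k8s_kernel_config` and the file name `99-kubernetes.conf` are hypothetical.

```shell
# Write the module list and sysctl settings commonly required by Kubernetes.
write_k8s_kernel_config() {
  modules_file="${1:-/etc/modules-load.d/kubernetes.conf}"
  sysctl_file="${2:-/etc/sysctl.d/99-kubernetes.conf}"

  # Modules loaded at boot by systemd-modules-load.
  cat > "$modules_file" <<'EOF'
overlay
br_netfilter
EOF

  # The bridge settings only exist once br_netfilter is loaded.
  cat > "$sysctl_file" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage (as root):
#   write_k8s_kernel_config
#   modprobe overlay && modprobe br_netfilter
#   sysctl --system
```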

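Prepare the K8S images

Perform these steps on every node of the Kubernetes cluster

1. Load the offline images

File shared via network drive: kubeadm-1.25.4-images.tar.gz

2. Start a local registry and tag the images for it

`docker run -d -p 5000:5000 --restart always --name registry registry:2`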
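The tagging and pushing commands are not shown above. The sketch below assumes the archive was produced by `docker save`, and that each image should end up directly under the local registry at 127.0.0.1:5000 with its original name and tag. The helper names `local_tag` and `retag_and_push` are hypothetical.

```shell
# Registry started in the step above; override by exporting REGISTRY.
REGISTRY="${REGISTRY:-127.0.0.1:5000}"

# Map an upstream image reference to its name in the local registry by
# keeping only the last path component (name:tag).
local_tag() {
  printf '%s/%s\n' "$REGISTRY" "${1##*/}"
}

# Tag each given image for the local registry and push it there.
retag_and_push() {
  for img in "$@"; do
    docker tag "$img" "$(local_tag "$img")"
    docker push "$(local_tag "$img")"
  done
}

# Usage (after the registry container is running):
#   docker load -i kubeadm-1.25.4-images.tar.gz
#   retag_and_push $(kubeadm config images list --kubernetes-version=1.25.4)
```

Keeping only the last path component also flattens `coredns/coredns` to `coredns`, which is the name kubeadm looks for when a custom image repository is set.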

Initialize the first master node

Perform these steps on the Kubernetes 01 node only

1. Initialize the master node

1.2 Initialize from the command line

`kubeadm init --control-plane-endpoint "k8s-master:6443" --upload-certs --cri-socket unix:///var/run/containerd/containerd.sock -v 5 --kubernetes-version=1.25.4 --image-repository=127.0.0.1:5000 --pod-network-cidr=10.244.0.0/16`

1.3 Initialize from kubeadm-config.yaml (an alternative to 1.2)

Generate the kubeadm-config.yaml configuration file

`cd /usr/local/kubernetes/`

Edit the configuration file

`# Change the image repository`

Example output:

`advertiseAddress: 192.168.1.183`

kubeadm-config.yaml example

Remember to change the advertiseAddress IP address

`apiVersion: kubeadm.k8s.io/v1beta3`
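Only the first line of the example file is shown above. The sketch below writes a v1beta3 configuration that carries the same values as the command-line variant in 1.2 (endpoint, version, image repository, Pod CIDR, CRI socket). It is an assumption about what the file contains, not the author's exact example. The helper name `write_kubeadm_config` is hypothetical.

```shell
# Write a kubeadm v1beta3 config equivalent to the flags used in 1.2.
write_kubeadm_config() {
  target="${1:-/usr/local/kubernetes/kubeadm-config.yaml}"
  advertise_ip="${2:-192.168.1.183}"
  # Unquoted delimiter: ${advertise_ip} is expanded while writing the file.
  cat > "$target" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise_ip}
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.25.4
controlPlaneEndpoint: k8s-master:6443
imageRepository: 127.0.0.1:5000
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# Usage (as root, on the first master):
#   write_kubeadm_config /usr/local/kubernetes/kubeadm-config.yaml 192.168.1.183
#   kubeadm init --config /usr/local/kubernetes/kubeadm-config.yaml --upload-certs -v 5
```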

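Initialize the master node

`# List the required images`

1.4 The end of the output looks similar to:

`...`

Copy this output to a text file. You will need it later to join master and worker nodes to the cluster.

2. Change the port range available to NodePort services (the default is 30000-32767)

`sed -i '/- kube-apiserver/a\ \ \ \ - --service-node-port-range=1024-32767' /etc/kubernetes/manifests/kube-apiserver.yaml`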
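Running the sed command twice inserts the flag twice. Since this step is repeated on every master, a guarded variant may be useful. The sketch below wraps the same sed expression (GNU sed syntax) in a check. The helper name `set_nodeport_range` is hypothetical.

```shell
# Insert the NodePort range flag after the "- kube-apiserver" line,
# unless the manifest already sets one.
set_nodeport_range() {
  manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  if grep -q -- '--service-node-port-range=' "$manifest"; then
    echo "service-node-port-range already set in $manifest"
  else
    # Same expression as in the step above; requires GNU sed.
    sed -i '/- kube-apiserver/a\ \ \ \ - --service-node-port-range=1024-32767' "$manifest"
  fi
}

# Usage (as root, on each master):
#   set_nodeport_range
```

The kubelet watches the manifests directory and recreates the kube-apiserver static Pod after the file changes, so the API may be unreachable for a short time.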

3. Set the kubeconfig path

CentOS

`export KUBECONFIG=/etc/kubernetes/admin.conf`

Debian/Ubuntu

`export KUBECONFIG=/etc/kubernetes/admin.conf`

4. Raise the Pod limit on the current node

`echo "maxPods: 300" >> /var/lib/kubelet/config.yaml`
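Appending with `>>` adds a second `maxPods` key if the step is run again or if the file already defines one. A sketch that updates the key in place, or appends it when missing, is shown below. The helper name `set_max_pods` is hypothetical. The kubelet reads this file at startup, so the change takes effect after a kubelet restart.

```shell
# Set maxPods in a kubelet config file, replacing an existing value if any.
set_max_pods() {
  cfg="${1:-/var/lib/kubelet/config.yaml}"
  max="${2:-300}"
  if grep -q '^maxPods:' "$cfg"; then
    # Key already present: rewrite it (a .bak copy of the file is kept).
    sed -i.bak "s/^maxPods:.*/maxPods: ${max}/" "$cfg"
  else
    echo "maxPods: ${max}" >> "$cfg"
  fi
}

# Usage (as root):
#   set_max_pods /var/lib/kubelet/config.yaml 300
#   systemctl restart kubelet
```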

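5. Allow the master to schedule workloads

- After initializing the master node, wait about 1-2 minutes before running the command below

- Before running it, check the kubelet service with `systemctl status kubelet` and confirm that it is running

`kubectl taint node $(kubectl get node | grep control-plane | awk '{print $1}') node-role.kubernetes.io/control-plane:NoSchedule-`

When the command succeeds it prints "xxxx untainted". If the output differs, wait a little and run it again to confirm.

6. Install the network plugin

`cat > /usr/local/kubernetes/kube-flannel.yml <<EOF`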

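Join the other master nodes to the cluster

Perform these steps on the Kubernetes 02 and 03 nodes

1. Join the Kubernetes cluster

`kubeadm join 192.168.1.183:6443 --token 58kbiu.fqueeaddjwo6cemv --discovery-token-ca-cert-hash sha256:9490f7a7961fa6277c8f8807ae14c28ef997f332732af90c02ed029214488821`

- This command is printed when kubeadm init succeeds on the first master. The one shown here is only an example; every cluster has its own. To join as a master, the command must also carry the `--control-plane` and `--certificate-key` parameters.

- If you no longer have it, regenerate it on the first master as follows:

- Regenerate the join command

`kubeadm token create --print-join-command`

- Re-upload the certificates and generate a new decryption key

`kubeadm init phase upload-certs --upload-certs`

- Assemble the join command: append the `--control-plane` and `--certificate-key` parameters, using the generated decryption key as the value of `--certificate-key`

`kubeadm join k8s-master:6443 --token 1b6i9d.0qqufwsjrjpuhkwo --discovery-token-ca-cert-hash sha256:3d28faa49e9cac7dd96aded0bef33a6af1ced57e45f0b12c6190f3d4e1055456 --control-plane --certificate-key 57a0f0e9be1d9f1c74bab54a52faa143ee9fd9c26a60f1b3b816b17b93ecaf6f`

This yields the join command for adding a master node to the cluster.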
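The three regeneration steps can be combined into one small helper, sketched below. It assumes the certificate key is the last line printed by `kubeadm init phase upload-certs --upload-certs`. The helper name `build_control_plane_join` is hypothetical.

```shell
# Combine a worker join command and a certificate key into the join
# command for a control-plane (master) node.
build_control_plane_join() {
  join_cmd="$1"
  cert_key="$2"
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}

# Usage on the first master (as root):
#   JOIN_CMD=$(kubeadm token create --print-join-command)
#   CERT_KEY=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   build_control_plane_join "$JOIN_CMD" "$CERT_KEY"
```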

2. Change the port range available to NodePort services

`sed -i '/- kube-apiserver/a\ \ \ \ - --service-node-port-range=1024-32767' /etc/kubernetes/manifests/kube-apiserver.yaml`

3. Set the kubeconfig path

CentOS

`export KUBECONFIG=/etc/kubernetes/admin.conf`

Debian/Ubuntu

`export KUBECONFIG=/etc/kubernetes/admin.conf`

4. Raise the Pod limit on the current node

`echo "maxPods: 300" >> /var/lib/kubelet/config.yaml`

5. Allow the master to schedule workloads

- After the current node has finished joining, wait about 1-2 minutes before running the command below

- Before running it, check the kubelet service with `systemctl status kubelet` and confirm that it is running

`kubectl taint node $(kubectl get node | grep control-plane | awk '{print $1}') node-role.kubernetes.io/control-plane:NoSchedule-`

- When the command succeeds it prints "xxxx untainted". If the output differs, wait a little and run it again to confirm.

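Join additional worker nodes to the cluster (if any)

Nodes such as api/db/file nodes, or microservice nodes added later, all join the current multi-master Kubernetes cluster as worker nodes

1. Join the Kubernetes cluster

`kubeadm join 192.168.1.183:6443 --token 58kbiu.fqueeaddjwo6cemv --discovery-token-ca-cert-hash sha256:9490f7a7961fa6277c8f8807ae14c28ef997f332732af90c02ed029214488821`

- This command is printed when kubeadm init succeeds on the first master. The one shown here is only an example; every cluster has its own.

- If you no longer have it, run `kubeadm token create --print-join-command` on a master node to get a new one

2. Raise the Pod limit on the current node

`echo "maxPods: 300" >> /var/lib/kubelet/config.yaml`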

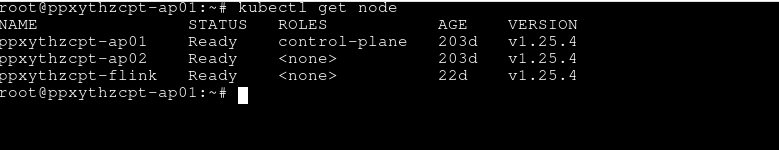

Check the cluster status

`kubectl get pod -n kube-system # the READY column should show "1/1"`
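Scanning the READY column by eye gets tedious on a larger cluster. The sketch below filters the command output down to Pods whose ready count does not match the expected count. It assumes the default column layout of `kubectl get pod -n <namespace>`, where READY is the second column. The helper name `not_ready_pods` is hypothetical.

```shell
# Read `kubectl get pod` output on stdin and print the names of Pods
# whose READY column (ready/total) shows fewer ready containers than total.
not_ready_pods() {
  awk 'NR > 1 { split($2, ready, "/"); if (ready[1] != ready[2]) print $1 }'
}

# Usage:
#   kubectl get pod -n kube-system | not_ready_pods
# No output means every listed Pod is fully ready.
```

Pods that have run to completion also show a ready count below the total, so they appear in the list even though they are healthy.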

The final check looks like this: